Mathematics

Most mathematical sequences are inherently unmusical. The digits of pi are too random, and if each prime is played on the piano key with that number, the primes run off the top of an 88-key keyboard after only 23 notes. Therefore, either a sequence must have an inherently restricted range, or we must interpret its numbers less literally.
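A quick check of the prime claim, assuming each prime p is played on key number p of a standard 88-key piano (keys numbered 1 to 88, lowest to highest):

```javascript
// Count how many primes fit on an 88-key piano if prime p is played on key p.
// Assumption: keys are numbered 1..88, lowest to highest.
const PIANO_KEYS = 88;

// Simple trial-division primality test; fine for numbers this small.
function isPrime(n) {
  if (n < 2) return false;
  for (let d = 2; d * d <= n; d++) {
    if (n % d === 0) return false;
  }
  return true;
}

// Return the first `count` primes in ascending order.
function firstPrimes(count) {
  const primes = [];
  for (let n = 2; primes.length < count; n++) {
    if (isPrime(n)) primes.push(n);
  }
  return primes;
}

const primes = firstPrimes(30);
const playable = primes.filter((p) => p <= PIANO_KEYS);
console.log(`${playable.length} primes fit; the next one is ${primes[playable.length]}`);
// prints "23 primes fit; the next one is 89"
```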

In "8 Bits of Grey" we write the sequence in binary to see which bit changes, and use that to indicate which pairs of notes in a pre-determined melody should be transposed. The pitch and duration of each note in that melody were defined by a human.

We can therefore generate a continuously changing melody that always sounds similar to its starting point, despite every bar being unique.
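A minimal sketch of this idea, assuming the "Grey" in the title refers to a binary reflected Gray code, in which exactly one bit flips between consecutive values. The 16-note melody (eight pairs, one per bit), its MIDI note numbers and the one-octave transposition are hypothetical placeholders, not the notes used in the track.

```javascript
// Hypothetical 16-note base melody as MIDI note numbers: 8 pairs, one pair per bit.
const BASE_MELODY = [60, 62, 64, 65, 67, 69, 71, 72, 71, 69, 67, 65, 64, 62, 60, 55];
const TRANSPOSE = 12; // assumption: a set bit moves its pair up one octave

// Binary reflected Gray code of n.
const gray = (n) => n ^ (n >> 1);

// Index of the single bit that differs between gray(n - 1) and gray(n), for n >= 1.
function changedBit(n) {
  const diff = gray(n) ^ gray(n - 1); // exactly one bit is set
  return 31 - Math.clz32(diff);
}

// Bar n: a pair of notes is transposed when its bit is set in gray(n).
function barFor(n) {
  const code = gray(n);
  return BASE_MELODY.map((note, i) => {
    const pair = Math.floor(i / 2); // notes 0-1 are pair 0, notes 2-3 are pair 1, ...
    return (code >> pair) & 1 ? note + TRANSPOSE : note;
  });
}

for (let n = 1; n <= 4; n++) {
  console.log(`bar ${n}: pair ${changedBit(n)} changes:`, barFor(n).join(" "));
}
```

Because consecutive Gray codes differ in one bit, each bar differs from the previous one in exactly one pair of notes, and all 256 bars produced by the 8-bit codes are distinct, which keeps the melody close to its starting point while never repeating a bar.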

Taken from the "Sneak Thief" EP.

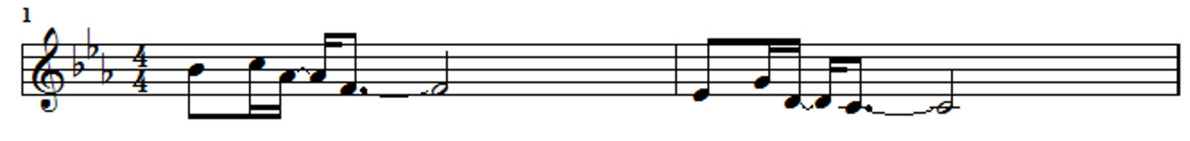

Fibonacci numbers are normally presented as 1, 1, 2, 3, 5, 8, 13, 21, ... But what if you go backwards? Then you get 1, 1, 0, 1, -1, 2, -3, 5, -8, ... The magnitudes are identical, but the signs alternate, which can be interpreted as absolute values around a pivot note, or as steps relative to the previous note, making a meta-Fibonacci sequence. Part 3 explores this idea. Previous compositions on this EP demonstrate what happens when the relative durations of notes approach the golden ratio.
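The backwards sequence comes from rearranging the recurrence to F(n-1) = F(n+1) - F(n). The sketch below shows both readings: values as absolute offsets (in semitones) around a pivot note, and values as steps relative to the previous note. The pivot of MIDI note 60 (middle C) and the one-semitone-per-unit mapping are assumptions for illustration.

```javascript
// Walk the Fibonacci recurrence backwards from the pair (1, 1):
// F(n-1) = F(n+1) - F(n), giving 1, 1, 0, 1, -1, 2, -3, 5, -8, ...
function backwardsFibonacci(count) {
  const seq = [1, 1];
  while (seq.length < count) {
    seq.push(seq[seq.length - 2] - seq[seq.length - 1]);
  }
  return seq.slice(0, count);
}

const PIVOT = 60; // assumption: middle C as the pivot note

// Reading 1: each value is an absolute offset (in semitones) from the pivot.
const absoluteMelody = (values) => values.map((v) => PIVOT + v);

// Reading 2: each value is a step relative to the previous note.
function relativeMelody(values) {
  const notes = [];
  let current = PIVOT;
  for (const step of values) {
    current += step;
    notes.push(current);
  }
  return notes;
}

const seq = backwardsFibonacci(9);
console.log(seq.join(", "));                // 1, 1, 0, 1, -1, 2, -3, 5, -8
console.log(absoluteMelody(seq).join(" ")); // 61 61 60 61 59 62 57 65 52
console.log(relativeMelody(seq).join(" ")); // 61 62 62 63 62 64 61 66 58
```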

Taken from the "Für Arvo" EP.

AI and more

This work is my first to include AI-generated lyrics, voice-cloning, and other trendy technologies!

It is a blatant homage to the music pioneers Kraftwerk. They often described themselves as 'workers' of the music, so I attempted to push the idea to its logical conclusion and let A.I. govern the work they are given. Of course, the band were unavailable to take part in this experiment, so I worked with the A.I., completely in the style of Kraftwerk, to write something they might have released during their 1974-1986 'classic period.' In doing so, I explored the dividing line between man, machine, and A.I.

The video was built by human hands and minds.

Taken from the "Co-Written" EP.

This piece is a combination of composed melody, electronics, and noise-led soundscape.

The piano and strings began as a basic leitmotif, which was expanded and developed by an AI. The electronica is human-composed, taking inspiration from the AI. Finally, the noise was the result of tweaking an algorithm to generate fluctuating and intersecting sine waves of notes (`let val = Math.sin(i/10+t*2.8+(i%3/3)*Math.PI)*0.1+Math.sin(i/10-t*2.8)*0.02+0.5`, since you asked!). After finding that the resulting notes were unmusical, whatever phrasing or pitch mapping was applied, a human slowed them down and assigned a suitably disconcerting sound patch to reflect the confusion of the narrator.
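For the curious, here is one way that expression might be wrapped into a note generator. Only the formula comes from the piece; the number of voices, the time step and the mapping of `val` onto MIDI note numbers are assumptions. Since the two sine terms have amplitudes 0.1 and 0.02 around an offset of 0.5, `val` always stays between 0.38 and 0.62.

```javascript
// The formula quoted above: i is the voice index, t is time (assumed to be seconds).
function sineValue(i, t) {
  return Math.sin(i / 10 + t * 2.8 + ((i % 3) / 3) * Math.PI) * 0.1 +
         Math.sin(i / 10 - t * 2.8) * 0.02 + 0.5;
}

// Assumption: scale val (0.38..0.62) onto the MIDI range 0..127 and round,
// which yields notes between 48 and 79.
const toMidiNote = (val) => Math.round(val * 127);

// Generate `steps` time slices for `voices` voices; each slice is an array of notes.
function generateNotes(voices, steps, dt) {
  const frames = [];
  for (let s = 0; s < steps; s++) {
    const t = s * dt;
    const frame = [];
    for (let i = 0; i < voices; i++) frame.push(toMidiNote(sineValue(i, t)));
    frames.push(frame);
  }
  return frames;
}

for (const frame of generateNotes(6, 4, 0.25)) console.log(frame.join(" "));
```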

Taken from the "Short Stories" EP.

SciArt

In this example piece, there are two components to the track: the planets and the cosmos.

The planets are represented by the pulsating bass drones, each moving in and out of the listener's perception according to the duration of its orbit. Only the first six planets are considered (that is, Mercury, Venus, Earth, Mars, Jupiter, and Saturn) since including Uranus and Neptune would require a *much* longer piece of music for their influence to be heard.

Furthermore, the pitch of these notes matches that of the planet itself, albeit in a significantly different octave!
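The piece doesn't spell out how each planet's pitch was obtained. One common approach, assumed here, treats the orbit itself as an extremely low frequency (one cycle per orbital period) and doubles it, one octave at a time, until it lands in an audible bass range; the same period, scaled down, can set how often each drone pulses. The orbital periods are standard sidereal values in Earth days; the target octave (55 to 110 Hz) and the time scale (one Earth year lasting 8 seconds) are hypothetical choices.

```javascript
// Sidereal orbital periods in Earth days.
const PLANETS = {
  Mercury: 87.969,
  Venus: 224.701,
  Earth: 365.256,
  Mars: 686.98,
  Jupiter: 4332.59,
  Saturn: 10759.22,
};

const SECONDS_PER_DAY = 86400;
const LOW_HZ = 55;           // assumption: drones sit in the 55-110 Hz octave
const SECONDS_PER_YEAR = 8;  // assumption: one Earth orbit lasts 8 s of music

// Transpose the orbital frequency up by whole octaves into [LOW_HZ, 2 * LOW_HZ).
function orbitalPitch(periodDays) {
  let hz = 1 / (periodDays * SECONDS_PER_DAY);
  let octaves = 0;
  while (hz < LOW_HZ) {
    hz *= 2;
    octaves++;
  }
  return { hz, octaves };
}

// Nearest MIDI note for a frequency (A4 = 440 Hz = note 69).
const nearestMidi = (hz) => Math.round(69 + 12 * Math.log2(hz / 440));

// How often the drone pulses, with orbits scaled relative to Earth's year.
const pulsePeriodSeconds = (days) => (days / PLANETS.Earth) * SECONDS_PER_YEAR;

for (const [name, days] of Object.entries(PLANETS)) {
  const { hz, octaves } = orbitalPitch(days);
  console.log(
    `${name}: ${hz.toFixed(2)} Hz (+${octaves} octaves), MIDI ${nearestMidi(hz)}, ` +
    `pulse every ${pulsePeriodSeconds(days).toFixed(1)} s`
  );
}
```

Under these assumptions Saturn's drone would pulse roughly every four minutes, while Neptune's 165-year orbit would stretch to about 22 minutes per cycle, which gives a feel for why the outer planets need a much longer piece.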

Secondly, the cosmos is heard through recordings from the Halley VLF receiver. These are spherics, whistlers and chorus, which originate from lightning strikes and the like. The recordings are courtesy of Nigel Meredith, a Space Weather Research Scientist at the British Antarctic Survey.

Windswept No. 3 is inspired by Phyllis Wager's typewriter in the collection of the Polar Museum.

It was created for the University of Cambridge Museums, as part of the Museum Remix: Unheard project, 2020.

Minimalism

Restrictions are freeing. In this instance, I decided to write a film score.

I decided to use one synthesizer.

The synthesizer in question can play only one note at a time.

Interactive

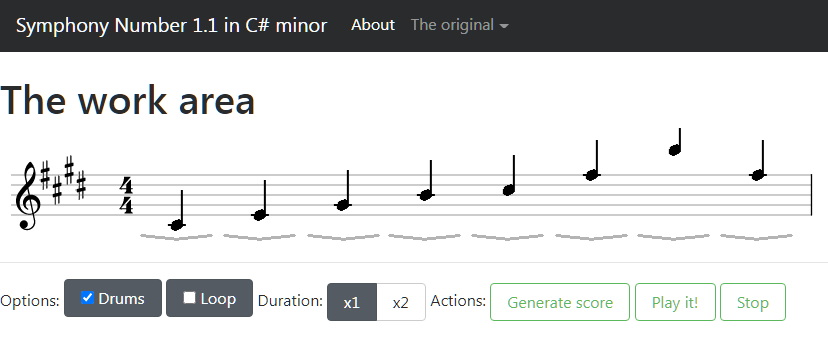

The musical output of many systems depends on both the algorithm and the input data. In the cases above, the algorithm and data are both tweaked (by either a human or an AI) until they produce a pleasing result.

But in some cases the algorithm can be designed so that the input is restricted to simple, casual interactions, permitting members of the public to create their own work.
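A minimal sketch of one way to restrict input so that any gesture sounds acceptable: whatever value a visitor provides (normalised here to 0..1, for instance a mouse or hand position) is snapped to a note in a fixed scale. The C major pentatonic scale, the two-octave range and the root note are assumptions, not details of the pieces described here.

```javascript
// C major pentatonic as semitone offsets from the root (assumption).
const PENTATONIC = [0, 2, 4, 7, 9];
const ROOT = 60;   // middle C (assumption)
const OCTAVES = 2; // playable range (assumption)

// Map any input to a scale note; out-of-range or invalid input is clamped, never rejected.
function quantize(x) {
  const clamped = Math.min(1, Math.max(0, Number.isFinite(x) ? x : 0));
  const steps = PENTATONIC.length * OCTAVES; // 10 available notes
  const index = Math.min(steps - 1, Math.floor(clamped * steps));
  const octave = Math.floor(index / PENTATONIC.length);
  return ROOT + 12 * octave + PENTATONIC[index % PENTATONIC.length];
}

console.log([0, 0.15, 0.5, 0.99, 1.7, -3].map(quantize).join(" ")); // 60 62 72 81 81 60
```

Clamping rather than rejecting input is the design choice that matters here: a visitor can never produce an error or a wrong note, only a different one.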

This example, known as "Symphony 1.1", is an extension of the "Fractal" piece composed for "Symphony Number 1 in C# minor".


Using commodity hardware such as the Leap Motion, you can control sound simply by waving your hands in the air. I used it to develop a basic soft synth called Slytherin.
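A theremin-style sketch of the mapping idea. It deliberately does not call the Leap Motion SDK: the hand position is passed in as plain millimetre coordinates, and the ranges below (±200 mm left to right for pitch, 100 to 400 mm above the sensor for volume, a three-octave span starting at 110 Hz) are assumptions rather than details of Slytherin.

```javascript
// Assumed tracking ranges, in millimetres relative to the sensor.
const X_RANGE = [-200, 200]; // left-right controls pitch
const Y_RANGE = [100, 400];  // height controls volume
const BASE_HZ = 110;         // pitch at the far left (assumption)
const OCTAVE_SPAN = 3;       // pitch range across X_RANGE (assumption)

// Normalise v into 0..1 within [lo, hi], clamping values outside the range.
const normalise = (v, [lo, hi]) => Math.min(1, Math.max(0, (v - lo) / (hi - lo)));

// Exponential pitch mapping, so equal hand movements give equal musical intervals.
function handToSound(xMm, yMm) {
  const frequency = BASE_HZ * 2 ** (normalise(xMm, X_RANGE) * OCTAVE_SPAN);
  const gain = normalise(yMm, Y_RANGE);
  return { frequency, gain };
}

console.log(handToSound(0, 250)); // centre of both ranges: about 311 Hz at half volume
```

In a browser, the returned values could drive the `frequency` parameter of a Web Audio `OscillatorNode` and the `gain` parameter of a `GainNode`.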

Other projects include the Strummer, which lets you simulate the plucking of guitar strings when using a keyboard instrument.
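One plausible way to make keyboard chords sound strummed, assumed here rather than taken from the Strummer itself: instead of sounding every key of a chord at once, each note is delayed slightly, ordered low to high for a down-stroke and high to low for an up-stroke. The 15 ms gap between strings is a hypothetical value.

```javascript
const STRING_GAP_MS = 15; // assumption: delay between successive "strings"

// Turn simultaneous key presses into staggered note events.
// notes: MIDI note numbers pressed together; direction: "down" or "up".
function strum(notes, direction = "down", startMs = 0) {
  const ordered = [...notes].sort((a, b) => a - b); // low to high
  if (direction === "up") ordered.reverse();        // high to low
  return ordered.map((note, k) => ({ note, timeMs: startMs + k * STRING_GAP_MS }));
}

// An E major chord (E3, G#3, B3, E4) pressed on a keyboard, in any order.
console.log(strum([64, 52, 56, 59], "down"));
console.log(strum([64, 52, 56, 59], "up", 500));
```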

I have also created haptic instruments, Arduino musicians, and custom MIDI controllers, alongside a range of amusing software hacks. Only some of these can be found on my personal page, 'The Music of The Marquis de Geek'.

Listen:

Here you can explore selections of my work. If you find them interesting, inspiring, or amusing in any way, please consider supporting the art by buying a digital copy.

Learn:

As an active speaker on the tech circuit, I often present on a wide range of computer-related topics. It is less common for me to discuss my art. This presentation at Skills Matter, "How I Wrote and Recorded an Algorithmic Symphony", is a rare example. In it, I cover the process of generating MIDI files algorithmically and assigning sounds to them, showing how you can turn an abstract idea into music.
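For readers who want to try the MIDI-generation step, here is a minimal, dependency-free sketch that builds a single-track Standard MIDI File (format 0) from a list of notes. It is not the code from the talk; the tick resolution, channel, velocity and the absence of a tempo event (so players fall back to the MIDI default of 120 bpm) are assumptions.

```javascript
const TICKS_PER_QUARTER = 480; // assumption: resolution in ticks per quarter note

// Encode a delta time as a MIDI variable-length quantity (7 bits per byte).
function varLen(value) {
  const bytes = [value & 0x7f];
  value >>= 7;
  while (value > 0) {
    bytes.unshift((value & 0x7f) | 0x80); // continuation bit on all but the last byte
    value >>= 7;
  }
  return bytes;
}

// Big-endian helpers for the chunk headers.
const u16 = (n) => [(n >> 8) & 0xff, n & 0xff];
const u32 = (n) => [(n >>> 24) & 0xff, (n >> 16) & 0xff, (n >> 8) & 0xff, n & 0xff];
const ascii = (s) => [...s].map((c) => c.charCodeAt(0));

// notes: [{ pitch, beats }] played one after another on MIDI channel 1.
function writeMidi(notes, velocity = 96) {
  const track = [];
  for (const { pitch, beats } of notes) {
    track.push(...varLen(0), 0x90, pitch, velocity); // note on, immediately
    track.push(...varLen(Math.round(beats * TICKS_PER_QUARTER)), 0x80, pitch, 0); // note off
  }
  track.push(...varLen(0), 0xff, 0x2f, 0x00); // end-of-track meta event
  const header = [...ascii("MThd"), ...u32(6), ...u16(0), ...u16(1), ...u16(TICKS_PER_QUARTER)];
  return Uint8Array.from([...header, ...ascii("MTrk"), ...u32(track.length), ...track]);
}

const bytes = writeMidi([
  { pitch: 60, beats: 1 },
  { pitch: 64, beats: 1 },
  { pitch: 67, beats: 2 },
]);
console.log(bytes.length, "bytes"); // 53 bytes
```

In Node.js, the result could be saved with `fs.writeFileSync("melody.mid", bytes)` (the file name is only an example) and opened in a DAW, where sounds can then be assigned to the track.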

About Me

As an aspiring polymath, I use this site to represent the electronic music side of my career, where it crosses over with my work as a technologist.

To learn about my other endeavors, please see my LinkedIn profile for a full breakdown of my career.

Need help?

If you need help building algorithmic compositions, composing film scores, or creating display music, then get in touch!